Understanding “Page Not Indexed” Issues in Google Search Console

By: Ed Harris / Published: April 2, 2025 / Last updated: April 2, 2025

Google Search Console is a great, free tool that provides a bunch of insights into how Google’s bots are discovering, crawling, and hopefully indexing URLs on your website. If you’re just getting started, I’ve shared instructions to help you set up a Search Console property for your website here.

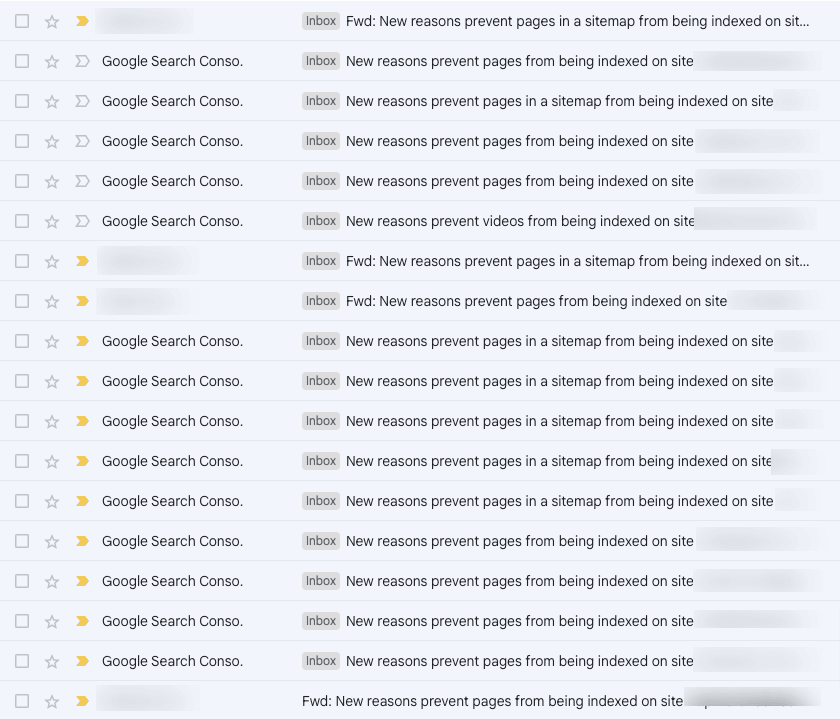

Once you have a Google Search Console property set up, you will receive regular email alerts about what’s going on with your website. Sometimes these will be happy “Congratulations on reaching 10k clicks last month” 🎉 type emails.

But most of the time, the emails come with a subject line like this:

“New reasons prevent pages from being indexed on site www.yourwebsite.org”

Since I have access to many of my clients’ Search Console properties, I often end up with an inbox that looks like this:

These emails often cause alarm and give users the impression that something is seriously wrong with their website. (In fact, clients often forward these emails to me, as you can see in the screenshot above.)

In reality, these emails are simply Google’s automated systems alerting you that Google can’t (or won’t) index a URL that it has previously discovered or crawled. Sometimes this is a problem, but often it’s not: you shouldn’t ignore these emails altogether, but they aren’t necessarily bad news.

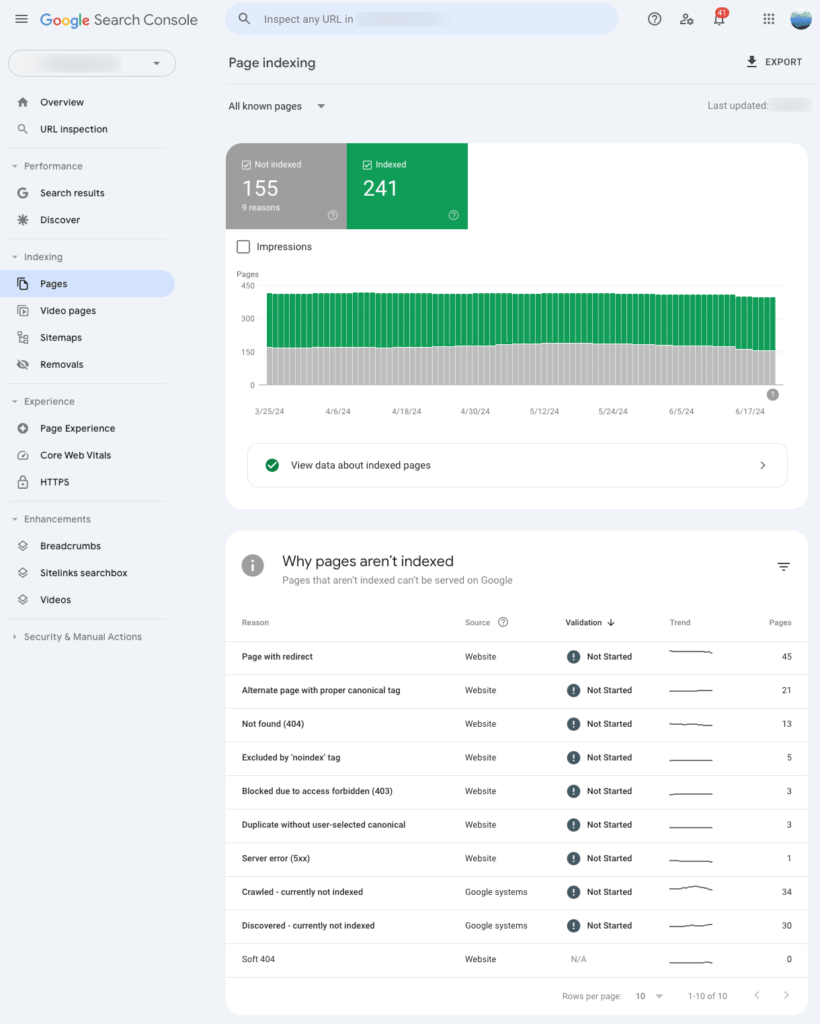

To determine whether there are any issues that need attention, you’ll need to look at the Page Indexing report in your Google Search Console property. It looks like this:

Below the chart, you’ll see a table with rows for each of the reasons that pages on the website aren’t included in Google’s index. You can click on each row to view some examples of the URLs included. And if you have taken action to correct an issue, you can click on the Validate Fix button to request that Google re-evaluate those URLs.

Again, in many cases it is not a problem that a URL is not indexed, but in some cases there may be issues to fix. Keep reading for explanations of the most common reasons a page isn’t indexed, and the issues to watch out for in each.

Before we jump into the reasons pages aren’t indexed, I want to share a couple more pieces of important background information to help all of this make sense.

Disclosure: some of the links below are affiliate links, meaning that at no cost to you, we will earn a commission if you click through and make a purchase. Learn more about the products and services we recommend here.

What’s an HTTP Status Code?

In the sections below you’ll see references to three-digit numeric codes like 404 or 301 or 302: these are HTTP status codes. When a client (a human user with a browser, OR Google’s crawler) makes a request to a server for a specific URL, the server returns an HTTP status code. Understanding these codes is helpful when interpreting Google Search Console data! View a list of HTTP status codes here.
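If you like to see things in code, here is a minimal Python sketch that groups the status codes mentioned in this article into their broad classes, using the standard library’s `http.HTTPStatus` enum for the official phrase. The helper names `status_class` and `describe` are purely illustrative; they are not part of Search Console or any Google tool.

```python
from http import HTTPStatus

def status_class(code: int) -> str:
    """Map an HTTP status code to its broad class (the first digit)."""
    if 100 <= code < 200:
        return "informational"
    if 200 <= code < 300:
        return "success"
    if 300 <= code < 400:
        return "redirect"
    if 400 <= code < 500:
        return "client error"
    if 500 <= code < 600:
        return "server error"
    return "unknown"

def describe(code: int) -> str:
    """Return a label like '404 Not Found (client error)'."""
    try:
        phrase = HTTPStatus(code).phrase
    except ValueError:          # codes the standard library doesn't know about
        phrase = "Unknown"
    return f"{code} {phrase} ({status_class(code)})"

for code in (200, 301, 302, 404, 410, 503):
    print(describe(code))
```

The first digit is what matters most when reading the report: 3xx codes show up under the redirect reasons, 4xx under the "not found" and "blocked" reasons, and 5xx under "Server error".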

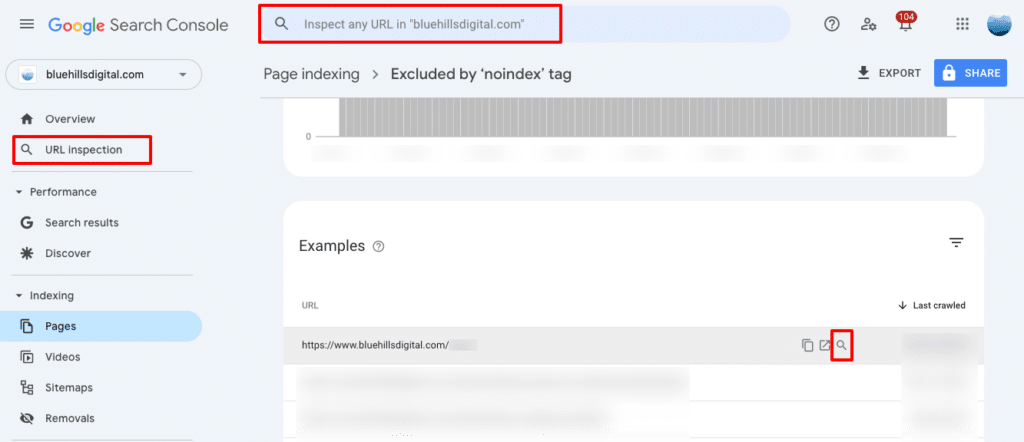

Where is the URL Inspection Tool?

Google Search Console includes a URL Inspection Tool, which you can use to see how Google understands one specific URL. You can access the tool in three places (highlighted in the image below):

- In the left sidebar of the Search Console interface

- By entering a URL in the search field at the top of the Search Console interface

- For a specific URL in a report by hovering over the URL and clicking the magnifying glass icon that appears

Page Not Indexed Reasons

Server error (5xx)

Your server returned a 500-level (5xx) error when Googlebot tried to crawl the page, for example because the request timed out or your site was overloaded. These errors are often temporary: your site may have been offline briefly for maintenance or because of an issue with your website host. You can use the URL inspection tool (available in the left navigation of Google Search Console) to test the URLs and confirm the error has been resolved, and then use the Validate Fix button to request re-indexing.
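If the report lists many URLs, checking each one in the URL inspection tool gets tedious. Here is a minimal Python sketch, assuming you have copied the example URLs out of the report, that re-requests each URL and keeps the ones that still fail. The function names are hypothetical, and this is only a rough pre-check before clicking Validate Fix; it does not reproduce how Googlebot crawls.

```python
import http.client
from urllib.parse import urlsplit

def head_status(url: str, timeout: float = 10.0) -> int:
    """Send a HEAD request and return the status code, without following redirects."""
    parts = urlsplit(url)
    conn_cls = http.client.HTTPSConnection if parts.scheme == "https" else http.client.HTTPConnection
    conn = conn_cls(parts.netloc, timeout=timeout)
    try:
        path = parts.path or "/"
        if parts.query:
            path += "?" + parts.query
        conn.request("HEAD", path)
        return conn.getresponse().status
    finally:
        conn.close()

def still_failing(urls, fetch_status=head_status) -> list:
    """Return the URLs that still answer with a 5xx code or can't be reached at all."""
    failing = []
    for url in urls:
        try:
            status = fetch_status(url)
        except (OSError, http.client.HTTPException):
            failing.append(url)     # timeouts and connection errors count as failures
            continue
        if 500 <= status < 600:
            failing.append(url)
    return failing
```

Some servers answer HEAD requests differently from normal GET requests, so if the results look odd, switch the request method to "GET".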

Page with redirect

This reason shows up for a URL that redirects to another URL. Because of the redirect, the initial URL will not be included in Google’s index. There are a few common situations in which URLs are redirected:

- You have set up a temporary or permanent 3xx redirect rule for the URL (perhaps because the page slug changed, or because the URL structure of a subdirectory changed)

- The URL includes the www prefix when your website uses the non-www version as the canonical (preferred) version of all URLs, or vice versa.

- The URL is an insecure version using http, instead of https (assuming your website is following best practices and redirects all insecure http requests to the https version of the URL)

In all of the cases described above, you wouldn’t want the initial URL that is subject to the redirect to be indexed. Instead, the URL that should be indexed is the one the redirect rule points to.
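To make the www and https cases concrete, here is a minimal Python sketch that rewrites a URL into a site’s preferred form and reports whether a request for the original URL would be redirected. It assumes the site redirects all http requests to https and all www requests to the non-www host (or the reverse with `use_www=True`); your real rules live in your server, host, or CMS settings, and the function names are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit

def preferred_url(url: str, use_www: bool = False) -> str:
    """Rewrite a URL to the assumed preferred form: https, with or without www."""
    parts = urlsplit(url)
    host = parts.hostname or ""
    if use_www and not host.startswith("www."):
        host = "www." + host
    elif not use_www and host.startswith("www."):
        host = host[len("www."):]
    netloc = host if parts.port is None else f"{host}:{parts.port}"
    return urlunsplit(("https", netloc, parts.path, parts.query, parts.fragment))

def will_redirect(url: str, use_www: bool = False) -> bool:
    """True if the URL differs from its preferred form, i.e. the site would redirect it."""
    return url != preferred_url(url, use_www)
```

For example, `preferred_url("http://www.example.com/blog/post")` gives `https://example.com/blog/post`, which is the URL you would expect to see indexed.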

However, reviewing this report may reveal situations where you are using the wrong URL in internal links, resulting in Google crawling URLs that it doesn’t need to. For example, if your website contains lots of links to blog posts in a …/blog/… subdirectory and you recently changed that subdirectory structure to …/news/… and implemented a redirect rule, you will see all those old internal links show up as “pages with redirects”.
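For the …/blog/ to …/news/ example, here is a minimal Python sketch that scans a page’s HTML and lists internal links that still point at the old path, so you can update them instead of relying on the redirect. The `/blog/` prefix and the function names are assumptions for illustration; note that it treats www and non-www hosts as different sites.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit

class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag in a page."""
    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)

def links_to_old_path(page_url: str, html: str, old_prefix: str = "/blog/") -> list:
    """Return absolute links to the same host whose path still starts with old_prefix."""
    parser = LinkCollector()
    parser.feed(html)
    site = urlsplit(page_url).hostname
    flagged = []
    for href in parser.hrefs:
        absolute = urljoin(page_url, href)      # resolve relative links like "/blog/x"
        parts = urlsplit(absolute)
        if parts.hostname == site and parts.path.startswith(old_prefix):
            flagged.append(absolute)
    return flagged
```

Links to other websites are ignored, because only your own internal links are yours to fix.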

Redirect error

This category is different to the “page with redirect” category above. In this case, the URL resulted in one of the following redirect errors:

- A redirect chain that was too long (one URL redirected to another, and another, and another …)

- A redirect loop (where multiple chained redirect rules eventually point back to the initial URL)

- A redirect URL that eventually exceeded the maximum URL length

- An empty or invalid URL in a redirect chain

To understand a specific URL’s redirect error, you can use the network tab in Chrome DevTools to get more details about the page load, or use a website audit tool like Semrush’s Site Audit to identify URLs with redirect chains (if the initial URL is on your own website).
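If you can export your redirect rules (for example from a redirect plugin or a crawl) as a simple "source to target" list, a few lines of Python can trace each chain and flag the error types above. This is a minimal sketch with hypothetical names; the default limit of 10 hops reflects Google’s crawler documentation at the time of writing, but treat it as an assumption, and note that the URL-length case is not checked here.

```python
def trace_redirects(start: str, redirects: dict, max_hops: int = 10) -> tuple:
    """Follow start through a {source: target} redirect map.

    Returns (status, path), where status is "ok", "loop", "too long",
    or "empty target", and path lists every URL visited in order.
    """
    path = [start]
    current = start
    while current in redirects:
        if len(path) - 1 >= max_hops:       # already followed max_hops redirects
            return "too long", path
        target = redirects[current]
        if not target:
            return "empty target", path
        path.append(target)
        if target in path[:-1]:             # we've been here before: a loop
            return "loop", path
        current = target
    return "ok", path
```

For example, a map where `/a` redirects to `/b` and `/b` redirects back to `/a` comes back as a loop, with the path `/a`, `/b`, `/a` showing exactly which rules to untangle.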

Excluded by ‘noindex’ tag

If you want to signal that a URL should not be included in search results, you can add a “noindex” robots meta tag to the page’s HTML head. This is typically a setting you can toggle on in a website builder’s page settings, or one made available by SEO plugins like Yoast for WordPress websites.

This category is exactly what it sounds like: URLs that are not indexed because they are marked “noindex”. Hopefully the URLs you see in this section of the report are ones you intentionally applied the noindex tag to, such as your Privacy Policy or the confirmation page a user sees after submitting a form.

If you see pages in this report that SHOULD be indexed, you need to go and remove the noindex tag! Once you have removed it, use the Validate Fix button to request that Google reevaluate these URLs. Alternatively, if you only need to fix this issue for one or two URLs, you can use the URL inspection tool to request re-indexing.
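A noindex directive can live in two places: a meta tag such as `<meta name="robots" content="noindex">` in the HTML, or an `X-Robots-Tag` HTTP response header. Here is a minimal Python sketch, with hypothetical names, that checks both for a page you have already downloaded. It simplifies the header handling by dropping any bot-name prefix (like `googlebot:`) regardless of which bot it names.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""
    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() in ("robots", "googlebot"):
            for token in (attr.get("content") or "").split(","):
                self.directives.add(token.strip().lower())

def is_noindexed(html: str, headers: dict) -> bool:
    """True if the page carries noindex (or none) in a robots meta tag or X-Robots-Tag header."""
    parser = RobotsMetaParser()
    parser.feed(html)
    header = next((v for k, v in headers.items() if k.lower() == "x-robots-tag"), "")
    # "googlebot: noindex" -> "noindex" (simplification: the bot prefix is dropped)
    header_tokens = {t.split(":")[-1].strip().lower() for t in header.split(",")}
    # "none" is shorthand for "noindex, nofollow"
    return bool((parser.directives | header_tokens) & {"noindex", "none"})
```

Checking the header matters because a noindex sent by a server or plugin won’t show up when you view the page source.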

URL blocked by robots.txt

In this case, Google was blocked from crawling the URL by a rule in your website’s robots.txt file. (Learn more about robots.txt files here.) To view the contents of your website’s robots.txt file, go to Settings in your Search Console property and click Open Report next to the Crawling > robots.txt section. If a URL that should be indexed is blocked by a rule in the robots.txt file, you may need to work with your website developer to adjust the file contents, or adjust the settings in any website plugins that inject content into the robots.txt file.

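Note that robots.txt is not a reliable way to keep a page out of Google’s index. It controls crawling, not indexing: a blocked URL can still be indexed (without its content) if other pages link to it, and Google can’t see a noindex tag on a page it isn’t allowed to crawl. If you don’t want a URL to be indexed, use a noindex tag instead, and make sure the URL isn’t also blocked in robots.txt.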
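To test a handful of URLs against your rules before changing anything, Python’s standard library includes a robots.txt parser, `urllib.robotparser.RobotFileParser`. Here is a minimal sketch; the sample rules and the `blocked_urls` name are made up for illustration. One caveat: Python’s parser applies the first matching rule in file order and does not support Google’s `*` and `$` wildcards or its most-specific-rule precedence, so treat it as a first pass. The robots.txt report in Search Console is the authoritative view.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /private/
"""

def blocked_urls(robots_txt: str, urls, user_agent: str = "Googlebot") -> list:
    """Return the URLs that the given robots.txt rules disallow for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(user_agent, u)]

print(blocked_urls(ROBOTS_TXT, [
    "https://example.com/private/report",
    "https://example.com/blog/post",
]))
```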

Not found (404)

In this case, the URL does not lead to a webpage; instead, it returns a 404 “page not found” error. Some websites handle this gracefully with a well-designed 404 page template, while others just show an ugly error message. Either way, Google can’t index the URL because there’s nothing there.

This might lead you to ask, how did Google discover the URL? The most likely options are:

- The URL is published as a link somewhere (either on your site or an external site)

- The URL used to point to a real page, but that page has since been deleted and no 3xx redirect rule exists

The best fix for these URLs is to update the links at their source, if possible. If links like these exist on your own site, a tool like the Semrush Site Health Audit will identify them as “Broken Links”, allowing you to fix them.
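Search Console gives you the list of 404 URLs, but fixing links at the source means knowing which of your pages contain them. If you have crawl data as a "page to outgoing links" mapping (for example exported from an audit tool), a reverse lookup takes only a few lines of Python. This is a minimal sketch; the data shape and the function name are assumptions.

```python
from collections import defaultdict

def pages_linking_to(broken_urls, site_links: dict) -> dict:
    """Map each broken URL to the sorted list of pages that link to it.

    site_links is assumed to be a {page_url: [linked_url, ...]} mapping.
    Broken URLs that no page links to are left out of the result.
    """
    broken = set(broken_urls)
    sources = defaultdict(set)
    for page, links in site_links.items():
        for link in links:
            if link in broken:
                sources[link].add(page)
    return {url: sorted(pages) for url, pages in sources.items()}
```

An empty result for a 404 URL suggests the broken link lives on another website (or in an old sitemap), rather than in your own content.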

If you realize you deleted a URL and should have created a 301 redirect rule because the content moved, go ahead and add the redirect rule. If you deleted the content permanently and it did not move, a 404 or 410 error code is the appropriate response.
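Most site owners will set this up in a redirect plugin or their host’s settings, but the underlying logic is simple. Here is a minimal sketch written as a tiny Python WSGI app: moved content gets a 301 pointing at its new home, permanently deleted content gets a 410, and anything unknown gets a 404. The `MOVED` and `GONE` entries are hypothetical examples, not real URLs.

```python
# Hypothetical data: content that moved, and content that was deleted for good.
MOVED = {"/blog/old-post": "/news/old-post"}
GONE = {"/spring-sale-2023"}

def app(environ, start_response):
    """Answer moved URLs with 301, deleted URLs with 410, and unknown URLs with 404."""
    path = environ.get("PATH_INFO", "/")
    if path in MOVED:
        start_response("301 Moved Permanently", [("Location", MOVED[path])])
        return [b""]
    if path in GONE:
        start_response("410 Gone", [("Content-Type", "text/plain")])
        return [b"This page has been permanently removed."]
    start_response("404 Not Found", [("Content-Type", "text/plain")])
    return [b"Page not found."]
```

To try it locally, you could pass `app` to `wsgiref.simple_server.make_server` from Python’s standard library.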

Soft 404

A “soft 404 error” happens when the server sends a 200 OK status for the requested page, but Google thinks that the page should return a 404 “not found” error instead. It may do this if the page content looks like an error, or if there’s no content.
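Google doesn’t publish exactly how it detects soft 404s, but you can audit your own templates for the obvious cases: pages that return 200 OK while displaying an error message, or pages with almost no text. Here is a rough Python heuristic; the phrase list and the 50-word threshold are hypothetical values you would tune for your own site, and the tag stripping is deliberately crude (it doesn’t remove script or style contents).

```python
import re

# Hypothetical phrases; adjust to match your own error and empty-state templates.
ERROR_PHRASES = ("page not found", "no longer available", "nothing found", "0 results")

def looks_like_soft_404(status: int, html: str, min_words: int = 50) -> bool:
    """Flag pages that return 200 OK but read like an error or are nearly empty."""
    if status != 200:
        return False                                 # real error codes aren't soft 404s
    text = re.sub(r"<[^>]+>", " ", html).lower()     # crude tag stripping
    if any(phrase in text for phrase in ERROR_PHRASES):
        return True
    return len(text.split()) < min_words
```

Pages this flags are worth a look: either they should return a real 404 or 410, or they need more substantial content.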

Blocked due to unauthorized request (401)

A 401 status code occurs when authorization is required to load a URL. If there are URLs in this report that Google should be able to access and index, remove the authorization requirement (for example, password protection) and then use the Validate Fix button or the URL inspection tool to request re-indexing.

It is also possible that server access control settings are deliberately blocking Googlebot from crawling URLs, even though there is no authorization or password protection in place. If you suspect this is the case, talk with your website hosting company to identify whether Googlebot specifically is being blocked.

Blocked due to access forbidden (403)

A 403 status code occurs when a user agent provides credentials for a URL that requires authorization but is not granted access. Unlike real users, Googlebot never provides credentials, so this response is typically an error that you can address with your website host or developer if the URLs in the report should be accessible for indexing.

If you do want Googlebot to index this page, you should either switch to admitting users without authentication, or explicitly allow Googlebot requests without authentication (though you should verify its identity).
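Google’s documented way to verify that a request really comes from Googlebot is a two-step DNS check: look up the hostname for the requesting IP address (reverse DNS), confirm it belongs to a Google domain, then look up that hostname (forward DNS) and confirm it resolves back to the same IP. Here is a minimal Python sketch of that check. The domain list is an assumption trimmed to Googlebot’s usual domains, `gethostbyname_ex` only handles IPv4, and the lookup functions are parameters so they can be swapped out for testing. Google also publishes its crawler IP ranges, which your host may prefer to use instead.

```python
import socket

# Assumed Googlebot hostname suffixes; check Google's current documentation.
GOOGLE_DOMAINS = (".googlebot.com", ".google.com")

def is_verified_googlebot(ip: str,
                          reverse_lookup=socket.gethostbyaddr,
                          forward_lookup=socket.gethostbyname_ex) -> bool:
    """Reverse-then-forward DNS check for a request claiming to be Googlebot."""
    try:
        hostname, _aliases, _addrs = reverse_lookup(ip)
    except OSError:
        return False                    # no reverse DNS record: treat as unverified
    if not hostname.endswith(GOOGLE_DOMAINS):
        return False                    # hostname isn't on a Google domain
    try:
        _name, _aliases, addresses = forward_lookup(hostname)
    except OSError:
        return False
    return ip in addresses              # hostname must resolve back to the same IP
```

The forward lookup is the important half: anyone can set a reverse DNS record that claims to be googlebot.com, but only Google controls what those hostnames resolve to.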

URL blocked due to other 4xx issue

This category is a catch-all for any other 4xx status code that is not covered above. You can use the URL inspection tool or Chrome DevTools to better understand what status code is being returned.

Crawled – currently not indexed

This category captures URLs that have been discovered by Google AND then crawled, but have not yet been included in the index. If you see URLs in this section that you want to be included in the index, first, use the URL inspection tool to confirm that the report is correct. When you inspect an individual URL, you may find that it IS in fact indexed. Google has acknowledged that there can be discrepancies between these two data sources (which is frustrating!)

When you’ve confirmed these URLs truly are not indexed, look for patterns in the URLs listed. If they share some common characteristics – like a common page template or content type – this might point to quality issues, where Google doesn’t perceive the URLs to be high enough quality to index. Make sure that the content is high quality, not duplicative, and has robust linking to other content on your website.
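Spotting patterns is easier with counts. Assuming you have exported the example URLs from this report, here is a minimal Python sketch (the function name is hypothetical) that groups them by their leading path segments, which often correspond to a page template or content type, such as tag archives or product filters.

```python
from collections import Counter
from urllib.parse import urlsplit

def common_sections(urls, depth: int = 1, top: int = 5) -> list:
    """Count URLs by their first `depth` path segments, most common first."""
    counts = Counter()
    for url in urls:
        segments = [s for s in urlsplit(url).path.split("/") if s]
        key = ("/" + "/".join(segments[:depth])) if segments else "/"
        counts[key] += 1
    return counts.most_common(top)
```

If most of the URLs fall under one section, that section’s template is the first place to look for thin or duplicative content. Increase `depth` to drill into a section.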

Discovered – currently not indexed

This category captures URLs that Google has discovered but hasn’t crawled yet. You can expect these URLs to be crawled in the future, and then either indexed or moved into one of the other categories described on this page.

If that explanation doesn’t fit (for example, the URL was not recently published), use the URL inspection tool to look for other potential issues that need to be addressed.

Alternate page with proper canonical tag

Google has determined that these URLs are alternates of other URLs on your website. Typically, alternate pages are those designed for specific devices (like a version of a page specifically for mobile devices) or translated versions for different languages. These pages correctly point to the authoritative (canonical) page with a canonical tag in their HTML head, and that canonical page is indexed, so no action is needed.

Duplicate without user-selected canonical

These pages have been determined to be duplicates of other pages, but they don’t have a canonical tag in the HTML head that points to the authoritative, canonical version. After crawling both pages, Google selected the other page as the canonical, so this one will not show in search results.

You can use the URL inspection tool to see which other URL Google has selected as the canonical page. If you think Google has chosen the wrong URL as the canonical version, you can explicitly indicate the correct version by adding a valid canonical tag. If the two pages are not duplicates, make sure the content is substantially different between the two pages.
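A canonical tag looks like `<link rel="canonical" href="https://example.com/page/">` in the page’s HTML head. To check what a page currently declares, here is a minimal Python sketch with hypothetical names that reads the first canonical tag from downloaded HTML and tells you whether it points back at the page itself (a self-referencing canonical). It doesn’t check canonicals sent in an HTTP `Link` header, and remember that Google treats canonical tags as hints rather than commands.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

class CanonicalParser(HTMLParser):
    """Record the href of the first <link rel="canonical"> tag."""
    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        rels = (attr.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rels and self.canonical is None:
            self.canonical = attr.get("href")

def canonical_of(page_url: str, html: str):
    """Return the absolute canonical URL a page declares, or None if it has none."""
    parser = CanonicalParser()
    parser.feed(html)
    return urljoin(page_url, parser.canonical) if parser.canonical else None

def is_self_referencing(page_url: str, html: str) -> bool:
    """True if the page's canonical tag points at the page's own URL."""
    return canonical_of(page_url, html) == page_url
```

If `canonical_of` returns None for a page in this report, adding a canonical tag is the explicit signal the paragraph above describes.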

Duplicate, Google chose different canonical than user

Finally, this category is for URLs where even though you indicated that one page is the canonical version of a duplicate, Google has determined that a different page is a better canonical page!

You can use the URL inspection tool to see which page Google selected as the better canonical option, compared to the “user-selected canonical”.

It can be tricky to resolve this problem. It’s important to try to avoid serving multiple pages that are so similar that Google might see them as duplicates. You can try adding explicit, self-referencing canonical tags to all pages across your website, although Google only sees these as hints and may ignore them (as seen in this URL category). The best approach is to avoid anything close to duplicate content.

How to find broken links and other website health issues

While Google Search Console reveals a lot of information about how Google understands the URLs on your website AND links pointing to your website, you may still need more help figuring out how to fix the issues above.

For example, Search Console can tell you that certain URLs are returning 404 errors, but it can’t provide a list of all the other pages on your website that may contain that broken link.

Another example: Search Console can’t help you identify chained redirect rules, where a request for a URL has to pass through multiple redirect rules before reaching a final destination.

Website audit tools like Semrush, SE Ranking, Screaming Frog, and Sitebulb can all help answer these questions. Some are browser-based (like Semrush and SE Ranking), and others are software you download and run on your computer (like Screaming Frog and Sitebulb). In all cases, you can set up a scan of your website that returns detailed information about errors on the site that may need attention.